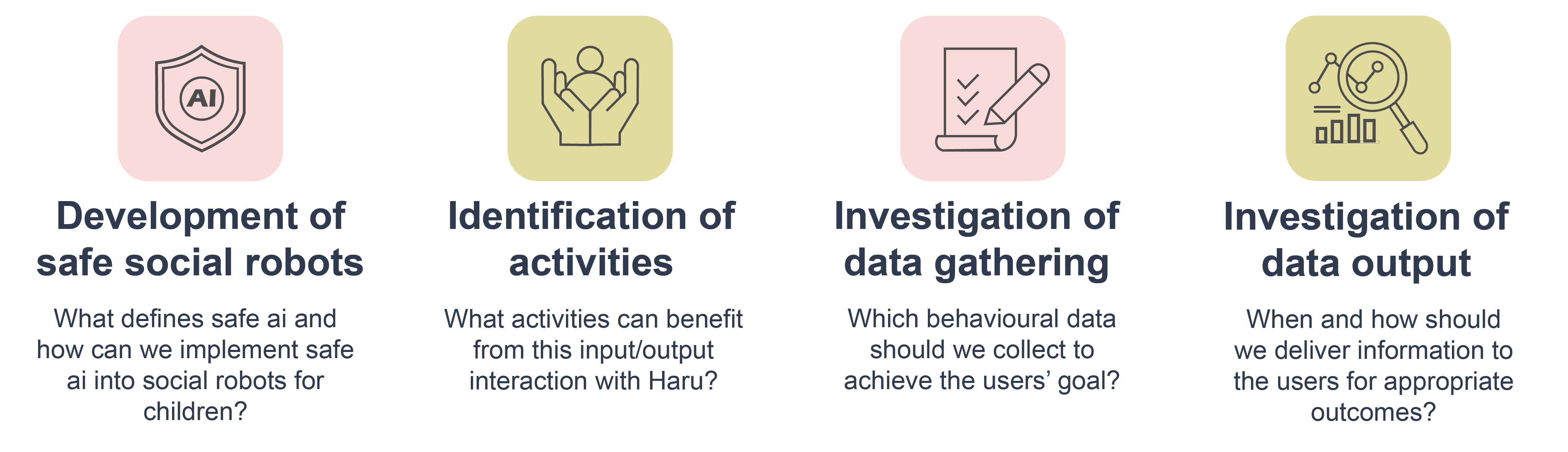

Research Goals

Video Insights

Identification of Activities

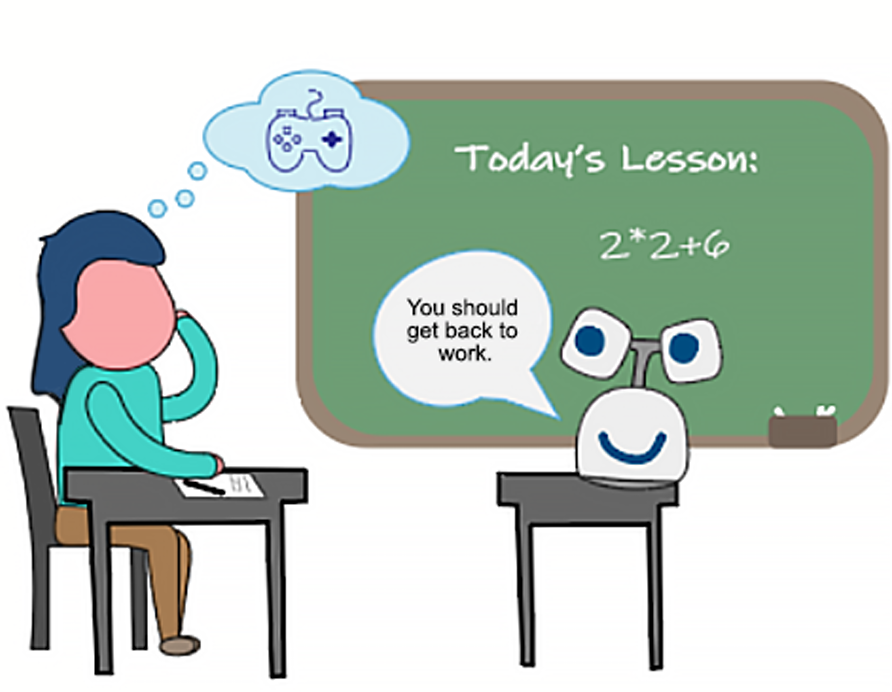

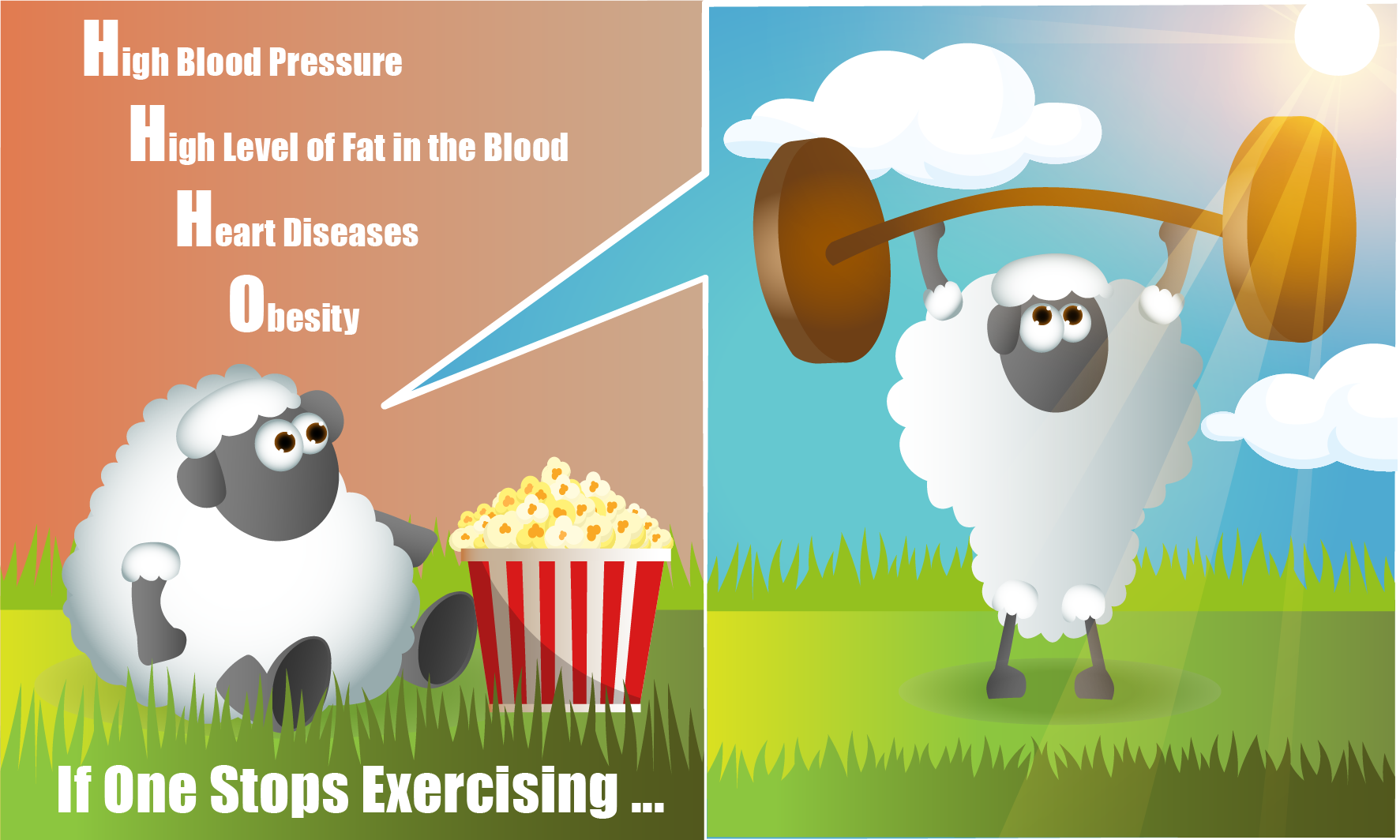

We investigate the types of activities that Haru can support in various learning environments (e.g., children distracted during class; an adolescent learning about healthy eating behaviours). We believe that some behaviours can be improved more readily than others with Haru.

Investigation of Data Gathering

We investigate how to gather information about a person's behaviour, and which types of information could be gathered via Haru’s future functions (e.g., audio, visual, and sentiment analysis). We aim to equip Haru with multisensory functions for deep human understanding.

Investigation of Data Output

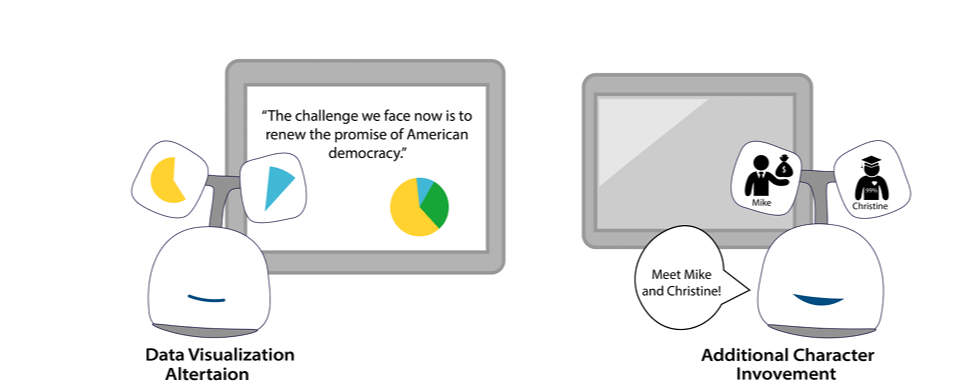

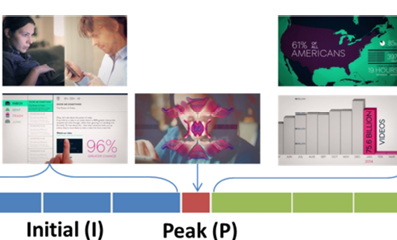

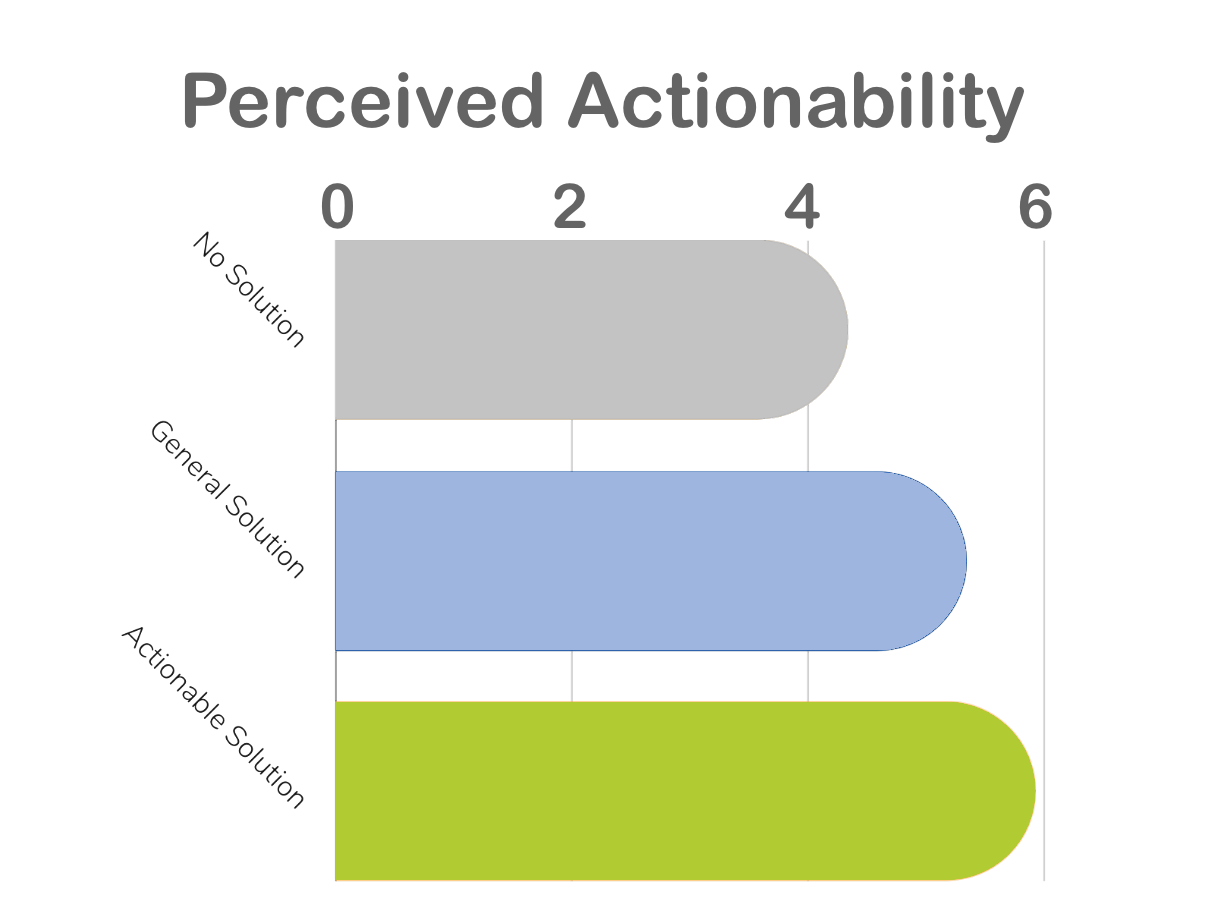

Once we gather a person's information, our goal shifts to data output. Focusing on efficient interaction between Haru and a person, we can use the collected data to support exploration that could improve behaviour. We ask questions such as "How do we deliver information to people so their behaviours can be improved effectively over time?" and "Can we deliver data so anyone can benefit from it?" Our current plan is to apply a data video approach to this investigation and to the way we deliver information to our users, because our study confirmed that the way we deliver messages in a video can influence the user’s perception (Sallam et al., 2022).


Deep Human Understanding for Education 4.0

For deeper human understanding in school settings, Haru will gather various types of information about children’s experiences. For example, EEG data will be captured to infer their potential focus, facial expressions will be captured to understand their emotions and social cues, and voice data will also be analyzed to understand their sentiment. We aim to deliver smooth communication between children and Haru.

Haru Explored: Dialogues and Interactions

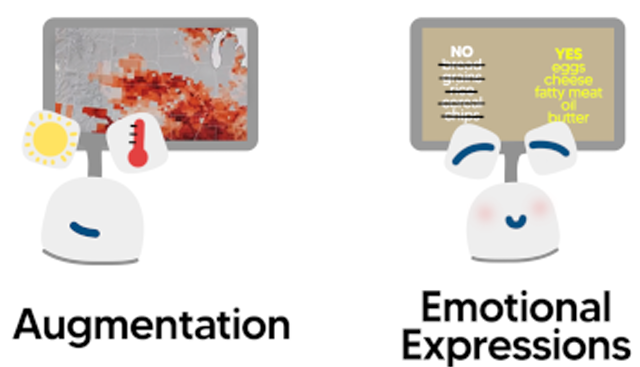

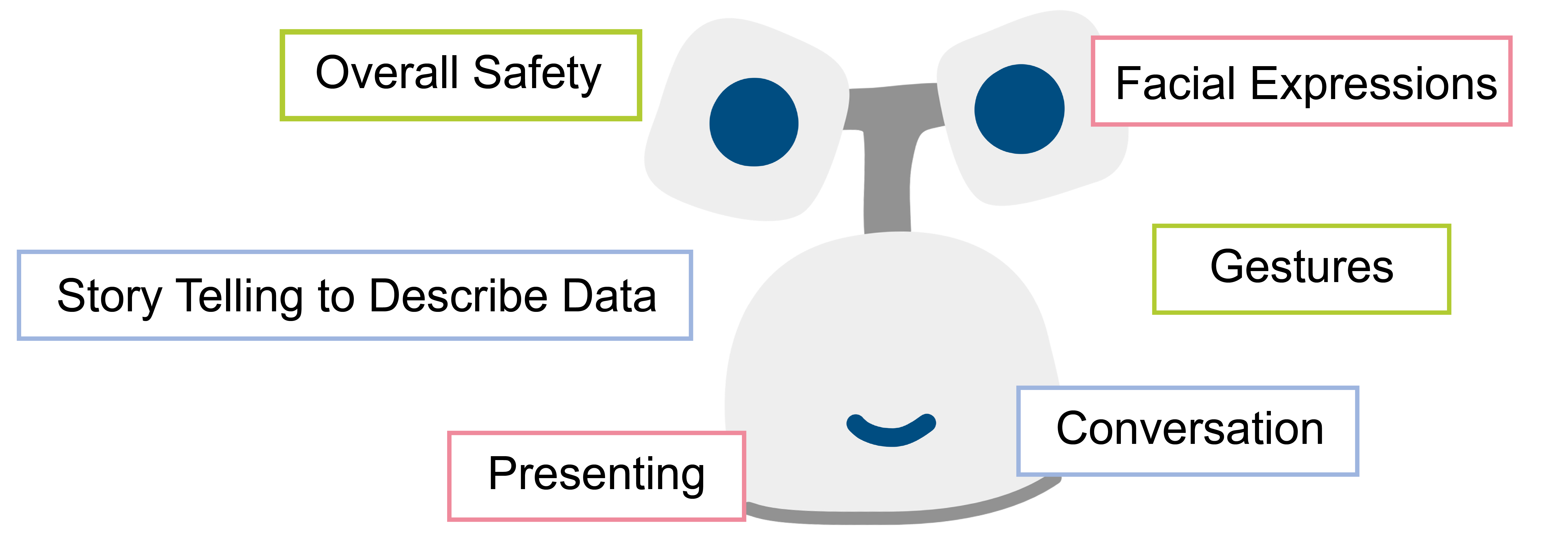

Haru has great potential in areas such as engaging in conversations, recognizing and expressing emotions, ensuring safety in interactions, detecting others' facial expressions, and presenting data visually.

Related Publications

Parent and Educator Concerns on the Pedagogical Use of AI-Equipped Social Robots

Francisco Perella-Holfeld, Samar Sallam, Julia Petrie, Randy Gomez, Pourang Irani, and Yumiko Sakamoto. (2024, September). Parent and Educator Concerns on the Pedagogical Use of AI-Equipped Social Robots. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Vol. 8, No. 3.

Exploring the Design of Social Robot User Interfaces for Presenting Data-Driven Stories

Anuradha Herath, Samar Sallam, Yumiko Sakamoto, Randy Gomez, and Pourang Irani. (2023, December). Exploring the Design of Social Robot User Interfaces for Presenting Data-Driven Stories. In Proceedings of the 22nd International Conference on Mobile and Ubiquitous Multimedia (MUM '23). (pp. 321-339). Association for Computing Machinery.

How Should a Social Robot Deliver Negative Feedback Without Creating Distance Between the Robot and Child Users?

Yumiko Sakamoto, Anuradha Herath, Tanvi Vuradi, Samar Sallam, Randy Gomez and Pourang Irani. (2023, December). How Should a Social Robot Deliver Negative Feedback Without Creating Distance Between the Robot and Child Users? In Proceedings of the 11th International Conference on Human-Agent Interaction (HAI '23). (pp. 325-334). Association for Computing Machinery.

Presenting Data with Social Robots: An Exploration into Conveying Data Videos using an Artificial Physical Narrator

Anuradha Herath, Samar Sallam, Tanvi Vuradi, Yumiko Sakamoto, Randy Gomez and Pourang Irani. (2023, December). Presenting Data with Social Robots: An Exploration into Conveying Data Videos using an Artificial Physical Narrator. In Proceedings of the 11th International Conference on Human-Agent Interaction (HAI '23). (pp. 476-478). Association for Computing Machinery.

Design and Development of a Teleoperation System for Affective Tabletop Robot Haru

Vasylkiv, Y., Ma, Z., Li, G., Brock, H., Nakamura, K., Pourang, I., & Gomez, R. (2021, August). Shaping Affective Robot Haru’s Reactive Response. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (pp. 989-996). IEEE.

Shaping Affective Robot Haru’s Reactive Response

Vasylkiv, Y., Ma, Z., Li, G., Brock, H., Nakamura, K., Pourang, I., & Gomez, R. (2021, August). Shaping Affective Robot Haru’s Reactive Response. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (pp. 989-996). IEEE.

Automating Behavior Selection for Affective Telepresence Robot

Vasylkiv, Y., Ma, Z., Li, G., Sandry, E., Brock, H., Nakamura, K., Pourang, I. and Gomez, R., 2021, May. Automating behavior selection for affective telepresence robot. In 2021 IEEE International Conference on Robotics and Automation (ICRA) (pp. 2026-2032). IEEE.

Persuasive Data Storytelling with a Data Video during Covid-19 Infodemic: Affective Pathway to Influence the Users’ Perception about Contact Tracing Apps in less than 6 Minutes

Sakamoto Y, Sallam S, Leboe-McGowan J, Salo A, Irani P. Persuasive data storytelling with a data video during Covid-19 infodemic: Affective pathway to influence the users’ perception about contact tracing apps in less than 6 minutes. Proceedings of the 2022 Pacific Vis. 2022.

Towards Design Guidelines for Effective Health-Related Data Videos: An Empirical Investigation of Affect, Personality, and Video Content

Sallam S, Sakamoto Y, Leboe-McGowan J, Latulipe C, Irani P. Towards Design Guidelines for Effective Health-Related Data Videos: An Empirical Investigation of Affect, Personality, and Video Content. Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems.

Persuasive Data Videos: Investigating Persuasive Self-Tracking Feedback with Augmented Data Videos

Choe, E. K., Sakamoto, Y., Fatmi, Y., Lee, B., Hurter, C., Haghshenas, A., & Irani, P. (2019). Persuasive data videos: Investigating persuasive self-tracking feedback with augmented data videos. In AMIA Annual Symposium Proceedings (Vol. 2019, p. 295). American Medical Informatics Association.

Hooked on data videos: assessing the effect of animation and pictographs on viewer engagement

Amini, F., Riche, N. H., Lee, B., Leboe-McGowan, J., & Irani, P. (2018, May). Hooked on data videos: assessing the effect of animation and pictographs on viewer engagement. In Proceedings of the 2018 International Conference on Advanced Visual Interfaces (pp. 1-9).

Evaluating Data-Driven Stories and Storytelling Tools

Amini, F., Brehmer, M., Bolduan, G., Elmer, C., & Wiederkehr, B. (2018). Evaluating data-driven stories and storytelling tools. In Data-driven storytelling (pp. 249-286). AK Peters/CRC Press

Data Representations for In-Situ Exploration of Health and Fitness Data

Amini, F., Hasan, K., Bunt, A., & Irani, P. (2017, May). Data representations for in-situ exploration of health and fitness data. In Proceedings of the 11th EAI international conference on pervasive computing technologies for healthcare (pp. 163-172).

Authoring Data-Driven Videos with DataClips

Amini, F., Riche, N. H., Lee, B., Monroy-Hernandez, A., & Irani, P. (2016). Authoring data-driven videos with dataclips. IEEE transactions on visualization and computer graphics, 23(1), 501-510.

Understanding Data Videos: Looking at Narrative Visualization through the Cinematography Lens

Amini, F., Henry Riche, N., Lee, B., Hurter, C., & Irani, P. (2015, April). Understanding data videos: Looking at narrative visualization through the cinematography lens. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 1459-1468).

Team Members

Pourang P. Irani

Professor, Principal's Research Chair in Ubiquitous Analytics

University of British Columbia (Okanagan campus)

Room No: 304

Charles E. Fipke Centre for Innovative Research

3247 University Way

Kelowna, BC V1V 1V7

Email: pourang.irani@ubc.ca

I am a Professor in the Department of Computer Science at the University of British Columbia (Okanagan campus) and Principal's Research Chair in Ubiquitous Analytics. My research lies broadly in the areas of Human-Computer Interaction and Information Visualization. More specifically, our team concentrates on designing and studying novel interactive systems for sensemaking "anywhere" and "anytime". To advance this work, we rely on mixed reality (MR) and wearable technologies to develop novel prototypes of visual interfaces and devices. I am also the Principal Investigator on an NSERC CREATE grant on Visual and Automated Disease Analytics (VADA). The aim of the VADA program is to train the next generation of data scientists, with a focus on health data analytics.